Understanding LLM Biases in Quantitative Research

From Lunch Conversation to Framework

Yesterday's lunch conversation with an LLM expert engineer sparked a fascinating exploration of the parallels between human cognitive biases and those exhibited by Large Language Models (LLMs) in quantitative research. What began as a technical discussion about how LLMs process information through sharding and vectorization evolved into a deeper investigation of bias in artificial intelligence.

The Genesis

During our lunch discussion, the engineer explained how LLMs break text into tokens and map each one to a vector (an embedding), creating a high-dimensional space in which related concepts sit close together. This process, while powerful, inherently creates the potential for systematic biases. Just as human brains create mental shortcuts and associations that can lead to cognitive biases, LLMs develop their own patterns of association through their vector spaces.
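To make the clustering idea concrete, here is a minimal sketch of how "closeness" in a vector space is commonly measured, using cosine similarity. The three terms and their 4-dimensional vectors are hypothetical values chosen by hand for illustration; they are not taken from any real model, which would learn vectors with hundreds or thousands of dimensions.

```python
import math

# Hypothetical, hand-picked 4-dimensional "embeddings" for illustration only.
EMBEDDINGS = {
    "earnings beat": [0.9, 0.8, 0.1, 0.0],
    "bullish": [0.8, 0.9, 0.2, 0.1],
    "default risk": [0.1, 0.0, 0.9, 0.8],
}


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means the same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def nearest(term):
    """Return the other term whose vector points most nearly the same way."""
    others = (t for t in EMBEDDINGS if t != term)
    return max(others, key=lambda t: cosine_similarity(EMBEDDINGS[term], EMBEDDINGS[t]))


print(nearest("earnings beat"))  # bullish
```

In a trained model the vectors are shaped by the training text, so if two concepts co-occur often enough they end up close together, and the model inherits that association whether or not it holds in a particular market regime.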

This morning, Andre Profitt posed what seemed like a simple question on social media: "What biases did you find in the models?" This prompted me to engage in an extensive analysis with Claude, exploring the various ways biases manifest in quantitative investment research when using LLMs.

The Revelation

What emerged was a striking realization: while LLMs and humans often exhibit similar biases, the underlying mechanisms are fundamentally different. For instance, where humans might develop confirmation bias through emotional investment in their ideas, LLMs can display similar behavior through the reinforcement of patterns in their training data and vector space relationships.

This led to several key insights:

Vector Space Dynamics: The way LLMs shard and vectorize information can create systematic biases in how they process and relate financial concepts.

Pattern Recognition Differences: Unlike human intuitive pattern recognition, LLMs rely on statistical relationships in their vector spaces, which can lead to different types of analytical biases.

Complementary Strengths: Understanding these differences opens opportunities to leverage the complementary strengths of human and machine intelligence in quantitative research.

A Framework Emerges

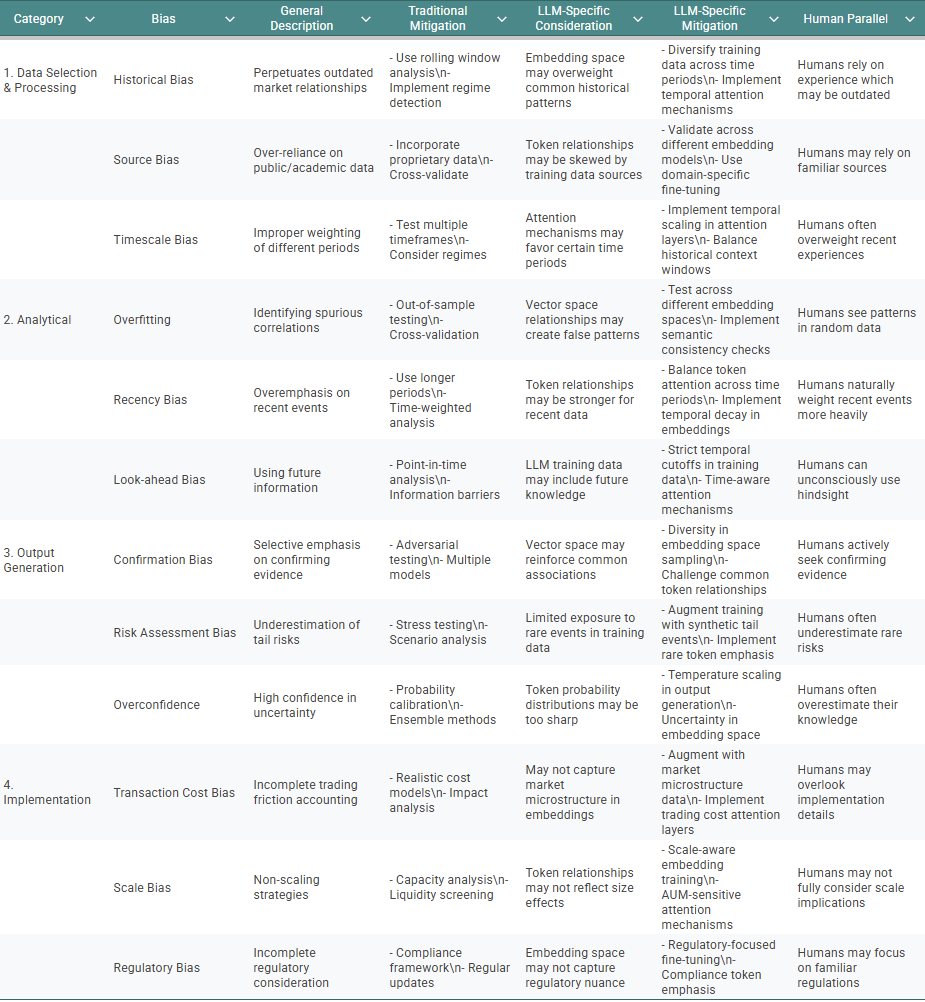

Through this exploration, it became clear that we needed a comprehensive framework to understand and address these biases. Working with Claude, we developed a detailed analysis that maps out traditional biases, their LLM-specific manifestations, and corresponding mitigation strategies.

The framework we developed goes beyond simple bias identification, incorporating:

Parallel analysis of human and LLM biases

Specific considerations for LLM vector space relationships

Practical mitigation strategies for both traditional and LLM-specific biases

Implementation guidelines for real-world applications

Special Considerations for Pattern Recognition

Statistical vs. Intuitive:

LLMs: Pattern recognition through vector space statistics

Humans: Pattern recognition through experience and intuition

Integration: Combine statistical rigor with human intuition

Memory Processing:

LLMs: Attention mechanisms that weigh the relationships between tokens within the context window

Humans: Associative memory with creative connections

Integration: Use LLMs for broad pattern identification, humans for novel insights
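For readers who want to see what "attention" computes, the sketch below is single-head scaled dot-product attention for one query, in plain Python. It deliberately omits the learned query/key/value projections, multiple heads, and masking used in real Transformer models, so treat it as a simplified illustration rather than how any particular LLM is implemented.

```python
import math


def softmax(scores):
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(query, keys, values):
    """Scaled dot-product attention for a single query vector.

    Each key is scored against the query, scores are divided by sqrt(d)
    and turned into weights with softmax, and the output is the
    weighted sum of the value vectors. Returns (output, weights).
    """
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return output, weights


out, w = attention([2.0, 0.0], keys=[[1.0, 0.0], [0.0, 1.0]], values=[[1.0, 0.0], [0.0, 1.0]])
```

The token whose key best matches the query receives most of the weight (about 0.80 versus 0.20 here), which is why whatever the model treats as most relevant in the context can dominate its output.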

Bias Awareness:

LLMs: Do not reliably detect their own biases, so detection must be built into the workflow explicitly

Humans: Can consciously recognize and adjust for biases

Integration: Implement systematic checks with human oversight
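One way to build such a systematic check is to test whether framing alone moves an LLM's answer. The sketch below assumes a hypothetical `ask` callable that wraps whatever LLM client you use and returns a numeric estimate; the framing sentences and the `tolerance` value are illustrative assumptions, not a standard.

```python
from typing import Callable


def framing_check(ask: Callable[[str], float], question: str, tolerance: float = 0.1) -> dict:
    """Ask the same question under neutral, optimistic and pessimistic framings.

    `ask` is a hypothetical wrapper around an LLM that returns a number
    (e.g. a probability). If framing alone shifts the answer by more than
    `tolerance`, the result is flagged for human review.
    """
    framings = {
        "neutral": question,
        "optimistic": f"Many analysts are optimistic. {question}",
        "pessimistic": f"Many analysts are pessimistic. {question}",
    }
    answers = {name: ask(prompt) for name, prompt in framings.items()}
    spread = max(answers.values()) - min(answers.values())
    return {"answers": answers, "spread": spread, "needs_human_review": spread > tolerance}


# A stub standing in for a model that is swayed by framing.
def swayed_stub(prompt: str) -> float:
    if "optimistic" in prompt:
        return 0.7
    if "pessimistic" in prompt:
        return 0.3
    return 0.5


result = framing_check(swayed_stub, "What is the probability the stock outperforms next quarter?")
```

Here the spread is 0.4, so the result is routed to a human reviewer; a model that answered 0.5 under every framing would pass.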

Implementation Guidelines

Regular Review Process:

Monitor both traditional and LLM-specific biases

Update mitigation strategies as LLM technology evolves

Document bias incidents and successful mitigations
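Documenting incidents is easier with a consistent record format. The structure below is one hypothetical way to log bias incidents and track whether each mitigation worked; the field names and bias labels are assumptions for illustration.

```python
from collections import Counter
from dataclasses import dataclass, field
from datetime import date
from typing import List, Optional


@dataclass
class BiasIncident:
    """One documented case of suspected bias in an LLM-assisted analysis."""
    observed_on: date
    bias_type: str                # e.g. "framing", "recency", "confirmation"
    source: str                   # "llm", "human", or "both"
    description: str
    mitigation: Optional[str] = None
    mitigation_worked: Optional[bool] = None  # None until the fix is reviewed


@dataclass
class BiasLog:
    incidents: List[BiasIncident] = field(default_factory=list)

    def record(self, incident: BiasIncident) -> None:
        self.incidents.append(incident)

    def open_incidents(self) -> List[BiasIncident]:
        """Incidents whose mitigation is missing or not yet confirmed to work."""
        return [i for i in self.incidents if i.mitigation_worked is not True]

    def counts_by_type(self) -> dict:
        """How often each bias type has been logged, to spot recurring problems."""
        return dict(Counter(i.bias_type for i in self.incidents))
```

Reviewing `open_incidents()` and `counts_by_type()` at each regular review gives a simple record of which biases recur and which mitigations have actually held up.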

Cross-Validation Framework:

Compare LLM outputs with traditional methods

Validate across different LLM architectures

Incorporate human expert review
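The three cross-validation steps above can be combined into one check. In this sketch the model labels, the baseline (for example, a historical volatility estimate) and both thresholds are hypothetical inputs you would supply; the function only flags cases for the expert review step and does not decide which estimate is right.

```python
from statistics import mean, pstdev
from typing import Dict


def cross_validate(llm_estimates: Dict[str, float], baseline: float,
                   max_gap: float, max_dispersion: float) -> dict:
    """Compare several LLMs' estimates of one quantity with a traditional baseline.

    llm_estimates maps a (hypothetical) model label to its estimate;
    baseline comes from a traditional method. Thresholds are user-chosen.
    """
    values = list(llm_estimates.values())
    consensus = mean(values)
    gap = abs(consensus - baseline)      # LLMs vs traditional method
    dispersion = pstdev(values)          # LLMs vs each other
    reasons = []
    if gap > max_gap:
        reasons.append("LLM consensus diverges from baseline")
    if dispersion > max_dispersion:
        reasons.append("LLMs disagree with each other")
    return {"consensus": consensus, "gap": gap, "dispersion": dispersion,
            "needs_expert_review": bool(reasons), "reasons": reasons}


check = cross_validate({"model_a": 0.20, "model_b": 0.22, "model_c": 0.21},
                       baseline=0.30, max_gap=0.02, max_dispersion=0.02)
```

In this example the models agree closely with one another but sit about 0.09 below the baseline, so the result is flagged for expert review with the reason "LLM consensus diverges from baseline".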

Continuous Improvement:

Regular retraining with updated data

Refinement of bias detection methods

Evolution of mitigation strategies
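As a sketch of how bias detection can keep running between reviews, the monitor below tracks the rolling mean signed error of LLM forecasts against realized outcomes and signals when it drifts persistently to one side. The window length, threshold and message text are illustrative assumptions; a signal is a prompt to re-examine prompts, data or calibration, not proof of bias.

```python
from collections import deque
from typing import Deque, Optional


class DriftMonitor:
    """Rolling mean signed error (forecast minus realized) of LLM forecasts.

    A mean persistently above zero suggests systematic optimism,
    below zero systematic pessimism.
    """

    def __init__(self, window: int = 20, threshold: float = 0.02):
        self.errors: Deque[float] = deque(maxlen=window)
        self.threshold = threshold

    def update(self, forecast: float, realized: float) -> Optional[str]:
        self.errors.append(forecast - realized)
        if len(self.errors) < self.errors.maxlen:
            return None  # not enough history yet
        bias = sum(self.errors) / len(self.errors)
        if bias > self.threshold:
            return "optimistic drift: review prompts or recalibrate"
        if bias < -self.threshold:
            return "pessimistic drift: review prompts or recalibrate"
        return None
```

Using a signed error rather than an absolute one is the design choice that matters here: large errors that cancel out indicate noise, while errors that consistently point the same way indicate bias.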

What makes this framework particularly valuable is its recognition that effective bias mitigation in quantitative research requires understanding both the similarities and the differences between human and LLM biases. It's not enough to simply apply traditional bias mitigation strategies to LLMs; we need to understand and address the unique ways these biases manifest in artificial intelligence.

Looking Forward

As we continue to integrate LLMs into quantitative research processes, understanding these biases becomes increasingly critical. This framework serves as a starting point for developing more robust and reliable research methodologies that leverage the strengths of both human and artificial intelligence while mitigating their respective weaknesses.
